Leaking secrets logarithmically

Previous blogs in this mini-series:

It should be noted here that the first two of those are essentially required reading if you want to understand the algorithm explained here. And if you don't then just reading the first two introductory parts will suffice to give the general flavour.

The third is not related to this mathematics (it's only a possible application of it).

Problem statement:

How can we form a ring signature over a set of \(N\) public keys, which is based on the ECDLP, and whose size of signature is logarithmic in the anonymity set \(N\)?

The context

Now we are going to flesh out the main body of the paper How to Leak a Secret and Spend a Coin by Groth and Kohlweiss.

Historical note: How to Leak A Secret was the title of the "OG" ring signature paper by Rivest, Shamir and Tauman c. 2001 (although the essence of the concept probably goes back to Chaum and his dining cryptographers, much earlier). The 'leak' concept is a bit confusing: in order to leak a secret (like a government or trade secret), you form a cryptographic algorithm to specifically not leak a different secret: i.e. which key signed the statement. So in this new Groth-Kohlweiss algorithm, we are doing that but:

... the paper's main contribution (its secondary contribution will be explained in the next blog) is to show how, by using bit decomposition, you can create a ring signature that scales logarithmically, using only an assumption of discrete log hardness.

As an example, this means in practice that creating ring signatures of size about 3kB for 1-2000 public keys from a curve like secp256k1 is completely feasible. As an example, see this ring signature on 2048 signet taproot utxos, which will be expanded on in the next blogpost.

It's also worth mentioning present day research in this area; see the Triptych paper by the Monero research team, which, as far as I know, is an attempt to expand on and optimize the idea presented here.

With AOS style ring signatures, explained in detail in an earlier blog, this kind of ring signature might require 30-60kB of space. The comparison would of course be even more favourable at larger anonymity set sizes, albeit the computational load would be non-trivial.

Th relevant section (3) of the paper is actually titled "Σ-protocol for one out of N commitments containing 0"; so not directly a construction for ring signatures, per se. But as we will see, we can get that trivially from this initial goal. That is, we want to prove that from a set of commitments \(C_0, \ldots, C_{N-1}\), we know an opening of one of them to the value zero.

The main, and ingenious idea, is to decompose the position of the 0-commitment, i.e. its index, in the list of all commitments, into bits, and then use the trick of how to prove that a set of commitments are all commitments to bits (as was explained in the earlier blogpost. It's that first bolded part that's profoundly unobvious.

The line of reasoning starts from a curiously trivial observation: if \(A_3\) is one entry in a list: \(A_1, \ldots A_{10}\), then:

\(\sum_1^{10} \delta_{i,3} A_i = A_3\)

Here \(\delta \) refers to the Kronecker delta function. A simple enough object, that just happens to be rather useful in mathematics sometimes, as here. By a kind of sleight of hand, we can write a general formula like the above to refer to a concrete case: here the specific case of choosing index 3.

Translating to our problem statement: we start with a set of commitments to \(C_0, \ldots, C_{N-1}\), where the Prover is assumed to have jumbled the order, and hidden the index of the commitment-to-zero. Suppose that the real (hidden) index is \(l\). Then we can make the, again deceptively obvious, statement:

\(\sum_0^{N-1} \delta_{i, l}C_i = C_l\)

and if we can prove that that is a commitment to zero, then we are done.

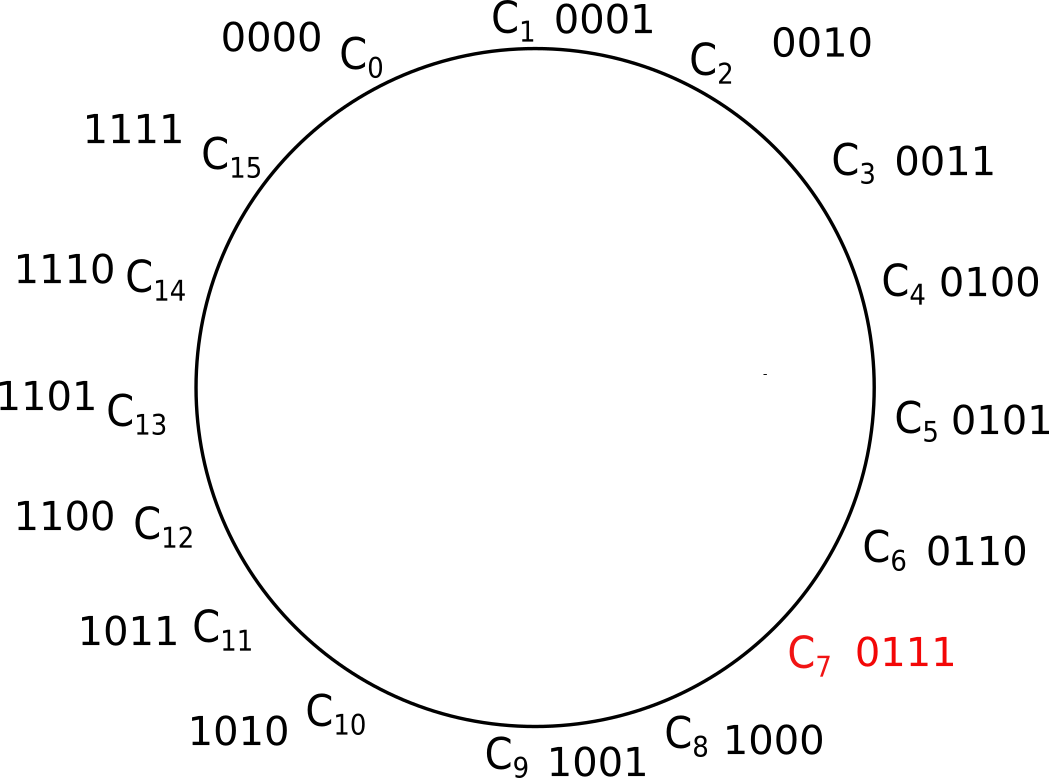

Image illustrates an example of 16 commitments with the true signing index at 7, and the indices in binary, i.e. decomposed into bits.

Now we find a way to reconstruct that term \(\delta_{i, l}\) into something that refers to the bits of \(l\):

\(\delta_{i,l} = \prod_j \delta_{i_j, l_j}\)

... where \(i_j\) is the j-th bit of \(i\). The above is obviously true because if two numbers are equal, *every* one of their bits must be equal. So the product is 1 if they are all the same, and 0 in any other case.

Remember in all this:

\(i\) and \(l\) are *indices*, not actual commitments or secrets. So if we have 16 commitments, and remember our goal is to prove that one of the 16 is a commitment to zero specifically, we might as Prover know that it's the 7th commitment. So in that case \(l\) would be:

0111

For simplicity of algorithm, we'll only consider values of \(N\) that are powers of 2, but you could always pad a list out with random commitments to fit that criterion if needed.

After assembling the randomized list (in our fake example, with the commitment-to-0 at index \(l=7\); so that is \(C_7\)), the next thing the Prover does is to follow our pre-existing algorithm for commitments to bits. That is, he takes the value 7 and makes commitments \(c_1, c_2, c_3, c_4\) to each of the bits of the number 7. (Notice we are using lower case \(c\) here to distinguish these commitments, from the original set that form part of the problem statement).

As before, the Σ-protocol to prove that all of these commitments are to bits, can be done in parallel and looks like:

Each bit is committed to with a randomised \(r\):

\(c_{l_j} = (l_j)G + r_jH\)

... and the corresponding "blinder" amount \(a\) for the bit, has to be committed with its own hiding value \(s\):

\(c_{a_j} = (a_j)G + s_j H\)

And as before, we need a special commitment to \(al\):

\(c_{a_j, l_j} = (a_j l_j) G + t_j H\)

I realise that this is complicated but we're so far following the exact steps of the previous "commit to a bit" protocol, just one for each index \(j\). These three values \(c_{l_j}, c_{a_j}, c_{a_j, l_j}\) are all sent in the first step of the sigma protocol, to the verifier (in the interactive version), who responds as usual with a single scalar \(x\) as challenge.

Note however that we missed one extra commitment that the Prover sends, per bit. This one will be explained in a moment.

The first component of the response is, again, as before, and specifically proves that all of these commitments are to values 0 or 1, i.e. they are, as promised, commitments to bits:

\(f_j = l_j x + a_j,\ z_{a_j} = r_j x + s_j,\ z_{b_j} = r_j\left(x-f_j\right) + t_j\)

; where \(j\) is the bit-index. Again, these terms are exactly as for commit-to-a-bit. And again, we are missing one extra term in the response which will be explained below.

Reconstructing the Kronecker delta term

We've proved that our list of sent commitments \(c_j\) where \(j\) runs from 1 to \(n\) where \(n\) is the number of bits (note: \(N = 2^n\) according to our statement earlier), are commitments to bits, but we need to prove that \(C_l\) is zero also, without revealing which index is \(l\). To do that, remember that our plan was to prove that:

\(\Sigma_0^{N-1} \delta_{i, l}C_i\)

commits to zero.

As a start, consider what happens if the verifier just multiplies together all the responses \(f_j\) for each bit-index; is that somehow related to \(\delta_{i,j}\)?:

\(\prod_j f_j = \prod_j (l_j x + a_j)\)

This is a polynomial of degree \(n\), where the highest degree term has coefficient which is the product of all the bits of \(l\). This gives us a clue. We already observed that:

\(\delta_{i,l} = \prod_j \delta_{i_j, l_j}\)

so if we write different functions of \(f_j\) for the case when each bit of \(i\) is 1 or 0, we can convert that highest order term into precisely \(\delta_{i,l}\):

(This point is pretty confusing: remember to think from the point of view of the Verifier. They will look at the sent values \(f_j\), knowing that each one of those random responses relates to one bit of the index \(l\), where \(l\) is an index into the list of the set of commitments \(C_0, \ldots, C_{N-1}\); so they can manipulate those responses with respect to every index \(i \in 0 \ldots N– 1\) - which is indeed what is needed). We define:

\(f_{1,j} = f_j = l_jx + a_{j} = \delta_{1, l_j}x + a_j\)

and

\(f_{0,j} = x - f_j = (1-l_j)x-a_j = \delta_{0,l_j}x - a_j\)

(Notice how that last term \(x - f_j\) is exploiting a very similar pattern to 'commit to a bit': \(x-f\) gives us the \(1-x\) type polynomial factor we need (here, it allows us to make another Kronecker delta.)

The verifier chooses the former if \(i_j\), that is, the bit of the current index \(i\) is 1, or the second if it is 0. Let's suppose the verifier then multiplies all these values together. For concreteness, imagine we are considering index \(i=9\), which in bit decomposition is:

1001

Then \(i_1 = 1, i_2 = 0, i_3 = 0, i_4 = 1\).

That means that the four values of \(f_{i_j,j}\) for \(i=9\) are, in order: \(\delta_{1, l_1}x + a_1, \delta_{0,l_2}x - a_2, \delta_{0,l_3}x - a_3, \delta_{1, l_4}x + a_4\). (Though this is implicit: the verifier must of course use the forms \(f_j, x - f_j\) as above).

In this case, since at least one of the bits of 9 is different from the bits of 7 (in fact, 3 of the 4 bits are different), it means that at least one of the terms \(\delta_{i_j, l_j}\) is zero, which we'll see below, is all that matters.

So, using this notation, the product at each index \(i\) becomes:

\(\prod_j f_{i_j,j} = \prod_j \left(\delta_{i_j, l_j}x \pm a_j\right)\)

(the shorthand \(\pm\) here means of course to choose the sign based on the value of the bit \(i_j\)).

The point is that the leading coefficient of the polynomial on the right is now exactly what we want; it's \(\delta_{i,l} = \prod_j \delta_{i_j, l_j}\). That means that the sum:

\(\sum_{i=0}^{N-1} \left(\prod_j f_{i_j,j}\right)C_i\)

... is a sum of commitments to polynomials as just described; and for each of those polynomials, the leading coefficient is exactly \(\delta_{i,l}\); which means that if we could ignore all the other terms in the polynomials, the overall commitment would be precisely \(C_l\), and so it will be a commitment to 0 as intended, if the Prover is honest (and the proof is sound!).

Ignoring the other terms in the polynomials

The above reasoning has led us to consider, for each each commitment index \(i\), a polynomial which we'll now call \(p_i(x)\):

\(\prod_j f_{i_j,j} = p_i(x) = \prod \left(\delta_{i_j, l_j}\right) x^n + \sum_{j=0}^{n-1}q_{i,j}x^n\)

where \(q\) are the coefficients of this polynomial \(p\). Writing them out is tedious, but they are calculable easily enough for the Prover, by just multiplying out the terms \(\left(\delta_{i_j, l_j}x \pm a_j\right)\) as explained above. Here is an example from my own Python proof of concept:

for i in range(len(ring)):

# first, get the bits i_j of i:

ibits = bit_decomp(i, bits)

# we need to recall:

# we are forming the product of f_{j,i_j})

# f_j,1 = delta(1, l_j) * x + a_j

# f_j,0 = delta(0, l_j) * x - a_j

pi_fij = [Scalar(1)]

for j in range(bits):

if ibits[j] == 0:

mult = (Scalar(delta(0, lbits[j])), Scalar.from_bytes(a[j])*Scalar(-1))

else:

mult = (Scalar(delta(1, lbits[j])), Scalar.from_bytes(a[j]))

pi_fij = poly_mult_lin(pi_fij, *mult)

where the function poly_mult_lin is defined as:

def poly_mult_lin(coeffs, a, b):

""" Given a set of polynomial coefficients,

in *decreasing* order of exponent from len(coeffs)-1 to 0,

return the equivalent set of coeffs after multiplication

by ax+b. Note a, b and all the returned elements are type Scalar.

"""

coeffs_new = [Scalar(0) for _ in range(len(coeffs)+1)]

coeffs_new[0] = a * coeffs[0]

for i in range(1, len(coeffs_new)-1):

coeffs_new[i] = b*coeffs[i-1] + a* coeffs[i]

coeffs_new[-1] = b*coeffs[-1]

return coeffs_new

Here it's done the most natural way, i.e. iteratively, by multiplying the polynomial by a linear factor each time. Presumably there are more efficient ways, too.

Now we have all these polynomials: \(p_0(x), p_1(x), \ldots p_{N-1}(x)\). For efficiency's sake you don't want to send commitments to all of them. Remembering that the Prover receives from the Verifier an unpredictable \(x\), which is used as input to the polynomial, the most efficient thing we can do is commit to the sum of the coefficients of each power of \(x\). So if we start with (still using \(n=4, N=16\), and \(l=7\) for simplicity):

\(p_0(x) = \delta_{0,7}x^4 + q_{0,3}x^3 + q_{0,2}x^2 + q_{0,1}x^1 + q_{0,0}\)

\(p_1(x) = \delta_{1,7}x^4 + q_{1,3}x^3 + q_{1,2}x^2 + q_{1,1}x^1 + q_{1,0}\)

...

\(p_7(x) = \delta_{7,7}x^4 + q_{7,3}x^3 + q_{7,2}x^2 + q_{7,1}x^1 + q_{7,0}\)

...

\(p_{15}(x) = \delta_{15,7}x^4 + q_{15,3}x^3 + q_{15,2}x^2 + q_{15,1}x^1 + q_{15,0}\)

The leading terms with \(\delta_{i,l}\) appear as explained in detail above. Of these, only the index 7 of course "exists" since all the other Kronecker delta values are zero. However because of the application of powers of \(x\), we group the remaining terms by those powers, mutliplying them by each commitment, and taking vertical slices in the above table:

\((q_{0,3}C_0 + q_{1,3}C_1 + \ldots + q_{15, 3}C_{15})x^3 +\)

\((q_{0,2}C_0 + q_{1,2}C_1 + \ldots + q_{15, 2}C_{15})x^2 +\)

\((q_{0,1}C_0 + q_{1,1}C_1 + \ldots + q_{15, 1}C_{15})x +\)

\((q_{0,0}C_0 + q_{1,0}C_1 + \ldots + q_{15, 0}C_{15}) +\)

To achieve the zero knowledge property, each of the rows above (each is *one* commitment; remember the homomorphism) has an extra blinding term, committing to zero, added to it (i.e \(0G + \rho_j H\)).

So to summarize, the "housekeeping term", which will be subtracted, for each bit \(j\), from the main term \(\sum_{i=0}^{N-1} \left(\prod_j f_{i_j,j}\right)C_i\), will be:

\(\left(\sum_{i=0}^{N-1} q_{i, j}C_i\right)x^j + \text{Comm}(0, \rho_j)\)

Summarizing the Prover's commit step

So to complete this task the prover must send, one per bit of the number of bits of the index:

\(c_{l_j}, c_{a_j}, c_{a_j, l_j}, c_{d_j}\)

where \(c_{d,j}\) is defined as: \(\sum_i (q_{i,j}C_i + \text{Comm}(0, \rho_j)\)

(There is a point of confusion re: the polynomial has \(j+1\) coefficients for \(j\) bits, not only \(j\). This is a detail that can be tidied up easily.)

The usual Fiat Shamir issue

To make the protocol non-interactive, we derive \(x\) from a hash function with:

\(x = \mathbb{H}\left((C_i \forall i), (c_{l,j}, c_{a,j}, c_{l_j, a_j}, c_{d_j} \forall j)\right)\)

i.e. the conversation transcript, including the initial problem statement. Obviously in practice certain other things may be included e.g. domain separation tags.

The response

This simply precis-s what we've already done. The prover sends, for each bit \(j\):

\(f_j,\ z_{a_j},\ z_{b_j}\)

and 1 additional term: \(z_d = rx^n - \sum_j \rho_j x^j\), which arises from the fact that the Verifier can only verify a commitment to zero if it has the corresponding blinding value; \(z_d\) is that blinding value, as you can check algebraically, from the above.

The Verification operation

Things only tie together cleanly when you see what the Verifier can do.

First, the verifier checks that the commitments to \(l_j\) are indeed commitments to bits; he uses exactly the algorithm of the previous blog post, once per bit. If this fails the proof is invalid because it requires that every committed value is 0 or 1.

Then, they calculate:

\(\sum_{i=0}^{N-1} \left(\prod_j f_{i_j,j}\right)C_i - \sum_j c_{d_j}x^j\)

Remember that we discussed that finding \(f_{1,j}\) and \(f_{0,j}\) can be done by the verifier using the expressions \(f_j\) or \(x-f_j\), respectively, for each value of \(i\).

As we have explained, the right hand term removes all the terms from the polynomials except the leading terms, and they are all zero except for the term at the right (secret) index. As the honest Prover knows, this is in fact a commitment to zero. The blinding value is \(z_d\) and so all the verifier needs to do is to check if the above actually equates to a commitment:

\(\text{Comm}\left(0, z_d\right)\)

If this check passes, we are done; the Verifier has validated that the Prover knows an opening of *one of* the commitments \(C_i\) which is a commitment to zero, but not which.

Scalability

The main point of course is that there is a proof transcript per bit. For each bit, we need to send 4 public keys (Pedersen commitments are single keys), and 3 scalars. In addition we need to send one more scalar (\(z_d\)). This results in a rough size estimate in bytes of \(32 \times 7 \times \text{log}_2 N + 1\).

Computing and verifying costs I leave as an open question; it's clear that they are more than the simpler, linear type of ring signature, but it's notable that the big cost is in computing what are commonly known in the literature as "multi-exponentiations" (an extremely confusing term in ECC since it refers to repeated scalar *multiplications*, i.e. expressions like \(a_1C_1 + a_2C_2 + \ldots a_nC_n\)). There has been extensive research into optimising these over the years, as I understand, and a number of algorithms are known.

Weren't we supposed to be talking about ring signatures?

The above extremely clever algorithm for proving "I know an opening to 0 of one of these commitments" translates trivially into "I know the private key of one of these public keys". Consider that if \(P_l = x_l G\) then we can "interpret" that as a Pedersen commitment to zero constructed as: \(C_l = 0H + x_l G\).

So that means to create a spontaneous ring signature on 2048 keys, I just have to treat all of them as commitments to zero of that same type; the above protocol works fine when all the non-signed indices happen to be commitments to zero, also. Because neither the prover nor the verifier needs to know the openings of those other commitments and they could literally just be random curve points; it would work the same. So there is nothing to do; this is a ring signature algorithm of the simplest "spontaneous" type, with perfect hiding and computational soundness (inherited from the Pedersen commitment itself).