The fallacy of agency

Contents

- Introduction

- Agents on the savanna

- Accidents and design

- The fallacy of agency

- It's not a fallacy if they're really out to get you

- Agency-less agents in the P2P revolution

Introduction

Turning and turning in the widening gyre

The falcon cannot hear the falconer;

Things fall apart; the centre cannot hold;

Mere anarchy is loosed upon the world,

The blood-dimmed tide is loosed, and everywhere

The ceremony of innocence is drowned;

The best lack all conviction, while the worst

Are full of passionate intensity.

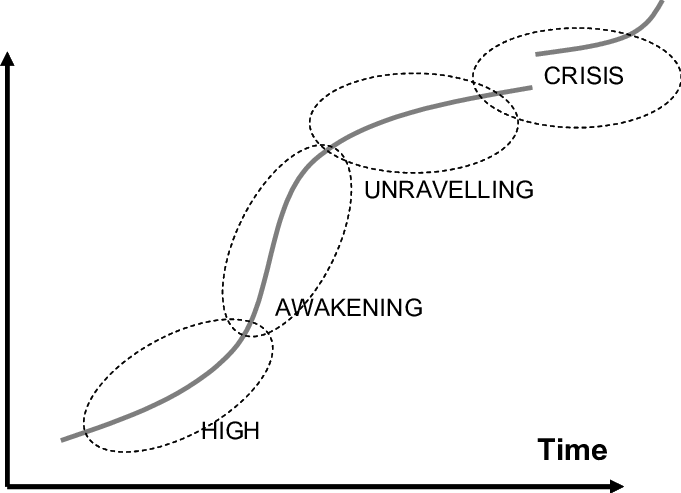

In 1919 Yeats thus lamented a collapse of order. This is the normal view of mankind since civilization became embedded; a falconer is needed. Too many falcons too far from the falconer will basically kill us all. And the falcons always seem painfully too far away. The widening gyre in which the falcons fly symbolizes the cyclical view of history, which is deeply embedded in human thought; modern examples include the so-called Fourth Turning of Strauss and Howe:

... but, you'd be amazed to what extent every generation, for hundreds of years at a time, is magically always in the 4th turning and never the 1st, 2nd or 3rd! Civilization is always on the brink of collapse, in our minds. It's fair to say Yeats had more reason than most in 1919 (WWI, Russian revolution, Irish revolution...) to take this view, but still the point stands. We are magnetically attracted to the concept of maximum drama. As the journalists say, if it bleeds it leads, because that apparently is how the human mind works.

Agents on the savanna

Humans survived and ultimately thrived more than any other species on the planet. It's generally considered an obvious fact that this was due to our brain development, but there is tremendous controversy about how we got there, i.e. what caused that huge increase in brain "power" crudely (and specifically, the cortex). Was it standing upright? Opposable thumbs? Different social hierarchy? Hunting large prey? Eating meat? Discovery of fire or tools? Or some combination of the above or just a fluke? We won't focus too much on this fascinating topic here, but only on the question of how exactly our brain functions differently from the other apes and animals.

And so we come to the first major turning point of this essay:

Hypothesis: the birth of abstract thought in humans came from agent-based modelling, and this led to what we now call consciousness, through the modelling of one's own physical self as an agent.

This hypothesis is quite distinct from the truly audacious The Origin of Consciousness in the Breakdown of the Bicameral Mind by Julian Jaynes, but it shares one aspect with it: the sense that we need an explanation of the difference in conscious awareness between humans and other animals which is not just quantitative but also qualitative.

In order to take down large prey, humans had to organize and interact at a more complex level than just "go towards the desirable thing" and "run away from the dangerous thing". For say 10 humans to take down one large beast, they had to coordinate in a way they did not need to when foraging for fruits, nuts etc. Even most apes do not generally share food like homo sapiens does; each ape largely (with obvious exceptions - child rearing) feeds itself. For example gorillas and orangutans generally don't share food. Chimpanzees apparently do so specifically when hunting for meat but not so much at other times. Other species that hunt in somewhat similar ways (for example dolphins) also exhibit exceptionally high cognitive ability, though not quite as much as humans.

So, to coordinate a hunt against dangerous prey, homo sapiens needed to communicate (language), and to plan (sense of past/future and, eventually, story telling).

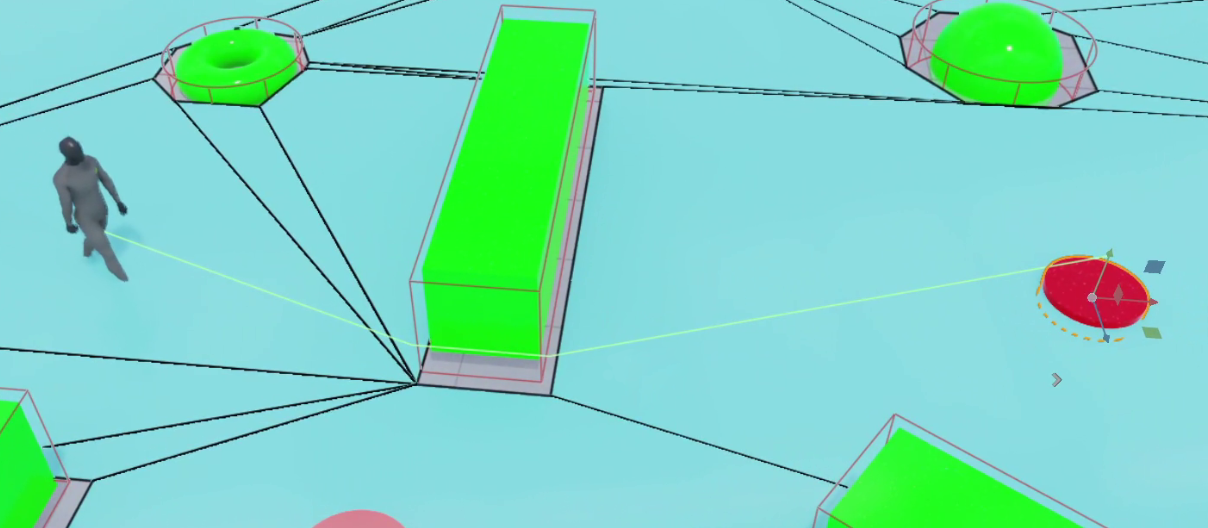

The rudimentary abstract thinking involved resembles a phenomenon from the recent modern world, the rudimentary AI seen in video games:

Pictured is a very basic illustration of what's termed in the game dev world a "navmesh", in which game characters are given paths that they can traverse to achieve goals. Common ways this is done in real games range from the rudimentary "finite state machine" to more sophisticated decision trees and "GOAP" (goal-oriented action planning). We can see that there are essentially two elements of this: first the isolation of an "NPC" (non-player character) as a distinct identity, and then that NPC having motivations, i.e. goals.
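The "finite state machine" mentioned above can be sketched in a few lines. This is only a minimal illustration and not code from any real game: the state names, the distance thresholds and the `step` and `run` helpers are all hypothetical. The point is just how little is needed to get an "agent with goals": an isolated identity (the NPC), a current state, and rules for moving between states.

```python
from enum import Enum, auto


class State(Enum):
    PATROL = auto()
    CHASE = auto()
    ATTACK = auto()


# Illustrative thresholds (arbitrary units); assumptions, not from any engine.
SIGHT_RANGE = 10.0   # NPC notices the player inside this distance
LOSE_RANGE = 15.0    # NPC gives up the chase beyond this distance
ATTACK_RANGE = 1.5   # NPC can strike inside this distance


def step(state: State, distance: float) -> State:
    """Return the NPC's next state from its current state and the player's distance."""
    if state is State.PATROL:
        return State.CHASE if distance <= SIGHT_RANGE else State.PATROL
    if state is State.CHASE:
        if distance <= ATTACK_RANGE:
            return State.ATTACK
        if distance > LOSE_RANGE:
            return State.PATROL
        return State.CHASE
    # Remaining case: ATTACK. Keep attacking while in reach, else resume the chase.
    return State.ATTACK if distance <= ATTACK_RANGE else State.CHASE


def run(distances, start: State = State.PATROL):
    """Feed a sequence of observed distances through the machine."""
    states = []
    state = start
    for d in distances:
        state = step(state, d)
        states.append(state)
    return states
```

Feeding it the distances `[20, 8, 12, 1, 5, 30]` walks the NPC through patrol, chase, chase, attack, chase and back to patrol. Note that the same distance (12) can mean "keep chasing" or "keep patrolling" depending on the current state; that memory of state is what separates this from a pure reflex.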

A lot of early abstract thinking was probably of that type: to get in position to achieve a goal in a hunting party, you must traverse this path at this time and in the pursuit of doing this action (the goal). Just as important as pathing and more general planning was taking into account other actors as another kind of "agent". Simplistically, imagine 10 hunters and 1 prey: the primitive human must create a mental model encompassing, to some extent, the actions, both present and future, of all 11 of these agents, with 2 of the 11 (yourself, and the prey) having a very special status compared to the rest. Survival selected for humans that could do this in a very refined way rather than just having a vague awareness that others exist. Things like dance rituals and sports nicely illustrate how, over time, we evolved to develop extraordinarily precise internal spatial modelling of a bunch of cooperative agents; we can postulate that this started with hunting.

So in this view of the world, the bedrock of human conscious thought (the stuff we do with the cortex, sometimes called "rational thinking" as opposed to just fight or flight or sex) is an internal model where a bunch of agents or actors do actions in some interplay with each other. The world exists as a passive backdrop to the actions of these agents.

Accidents and design

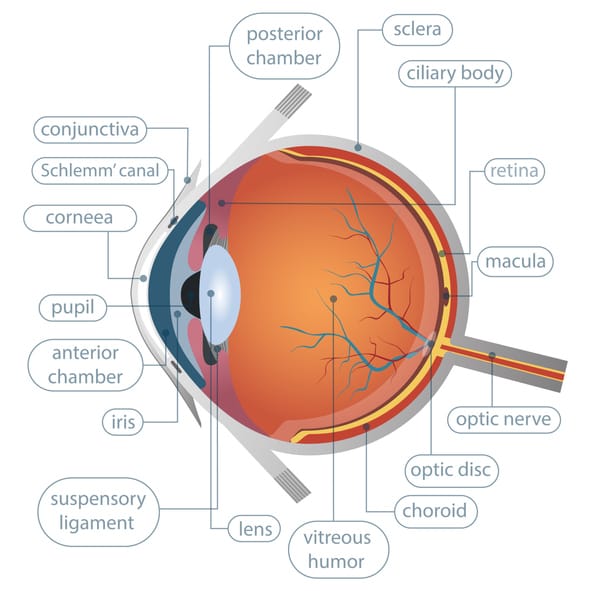

In this view of the world, there is a clear division between things that happen because an agent chooses to cause them to happen, and other events. Those latter events are in some sense "accidents" (see act of God; a name which will soon seem ironic). And in this version of the world, accidents are an aberration; they would certainly not lead to something like this:

(for those few readers unaware, the principal argument in favor of intelligent design has always been that something as sophisticated as the human eye is completely implausible to explain with "accidents" - and therefore it can only be explained by agency - in their view, an all powerful "Designer" agent).

The reason natural selection is a good argument and intelligent design a bad one has basically two facets:

- because the former is parsimonious (or short and economical if you don't like .. non-parsimonious terminology) and the latter is not only not that, but is also, like so many bad arguments, ultimately circular. Quis custodiet ipsos custodes becomes Quis creat ipsos creatores - anyone truly religious may roll their un-designed eyes at this, but that's only appropriate in a truly religious context - in this context, the point should be clear: appealing to a "designer" to explain complexity explains nothing at all.

- because the former has Popperian properties - it can predict falsifiable things (see e.g. the fossil record), and the latter cannot. To trigger certain factions in Physics, this is also why the many worlds interpretation of Quantum Mechanics is, fundamentally, religion and not science.

The fallacy of agency

Now, natural selection might be parsimonious but that doesn't help if you have no causal mechanism to explain it. Its inner mechanisms (the way in which random errors in a copying mechanism can lead to low entropy structures in an energy-bathed environment; see the Second Law) are not very accessible in their details, but they are intuitive, provided your mode of thought is not dominated by the fallacy below. (For a long time I just assumed that errors in DNA were mostly a function of cosmic radiation; I was wrong about that "mostly", but I did know that the exact cause of the errors doesn't matter; all you need is a copying mechanism and random errors.) The fallacy is:

- (fallacy) a complex event or structure required an agent to create it

The real reason that, to this day, many humans who are exposed to the idea of natural selection don't "buy" it is that it violates this savanna-type agent-based thinking. That would of course never be vocalized in the form of the above, ridiculously abstract, sentence; this is an entirely unconscious bias. If it were vocalized, it might be in a form like: "If something does something, in a complicated way, it must be because something or someone wanted it to".

If you have a friend who wants to understand natural selection better, I can recommend Dawkins' brilliant book The Blind Watchmaker.
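The claim that "all you need is a copying mechanism and random errors" (plus something that decides which copies survive) can be made concrete with a toy simulation. This is a sketch under loud assumptions, not a model of real biology: the bit-string genome, the `fitness` function (here simply the number of 1 bits, standing in for "how well this copy does in its environment") and all the parameters are hypothetical choices made for illustration.

```python
import random


def mutate(genome, rate, rng):
    """Copy a genome, flipping each bit with probability `rate` (a copying error)."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in genome]


def fitness(genome):
    """Hypothetical stand-in for environmental fit: the count of 1 bits."""
    return sum(genome)


def evolve(length=40, offspring=20, rate=0.02, generations=200, seed=0):
    """Repeat copy-with-errors plus selection; return the fitness after each round."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    parent = [0] * length      # start from the least fit genome possible
    history = [fitness(parent)]
    for _ in range(generations):
        children = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Selection: whichever copy scores best (or the unchanged parent) survives.
        parent = max(children + [parent], key=fitness)
        history.append(fitness(parent))
    return history
```

Nothing in this loop knows where it is going. The mutation step is blind, and the selection step only compares scores after the fact, yet the fitness recorded in `history` never decreases and climbs well above its starting value of zero. No agent is needed to explain the climb.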

The fallacy of agency is a close cousin of pareidolia; it's a tendency to see things that are not there, because our perceptual apparatus is built to see that thing - except it's a thing seen with an internal "abstract" eye.

It's not a fallacy if they're really out to get you

Agent-based thinking is incredibly pervasive, and while useful, often leads us down dubious cognitive paths. To illustrate that, let's consider the troublesome idea of "conspiracy theories". Before looking at them, let's note that people do conspire, as has been observed in many situations, e.g. by Adam Smith in The Wealth of Nations:

"People of the same trade seldom meet together, even for merriment and diversion, but the conversation ends in a conspiracy against the public, or in some contrivance to raise prices."

(though this has been taken horribly out of context; he was talking about trade guilds). The ability to do this is a function exactly of the ability to coordinate, which itself is a function of scale: it works at small ones and not at big ones. What works for 10 participants in a hunt does not work for 100,000 inhabitants of a city. That cabals and conspiracies tend to not work, long term, at large scales, ought to be obvious, really, but if you need examples just look at the New York taxi medallion scenario's ultimate failure to Uber, or OPEC's failure to control oil prices due to (often blatant) overproduction by members, among a billion other examples - the tendency is always there, but the centre, as they say, "cannot hold". Monopolies exist, as anyone who used a PC in the 90s could attest, but they also are impermanent, as seen by anyone who continued to live into the next decade (though, this is the "everyday" way of seeing monopoly; I do have a lot of sympathy for Mises' alternative point of view - many of these examples last a lot longer than you might expect due to government regulation).

But whilst the topic of cabals and monopolies (quasi- or otherwise) is an interesting one, it is not really what the fallacy of agency is about. Here the problem is not so much that agency exists, as the fact that agency doesn't scale very well. We'll talk more about that, later.

But in its pure form, the fallacy of agency is about assigning agency where none exists, and that's what the really .. fun .. conspiracies are all about.

Hurricanes hardly happen - by chance

A beautiful, if slightly disturbing, recent example of the latter is the case of allegedly man-made hurricanes.

As the meteorologists in the article note:

“For me to post a hurricane forecast and for people to accuse me of creating the hurricane by working for some secret Illuminati entity is disappointing and distressing, and it’s resulting in a decrease in public trust,” says Cappucci. He says he hasn’t slept in multiple days and is exhausted. This past week he received hundreds of messages from people accusing him of modifying the weather and creating hurricanes from space lasers.

“Something has clearly changed within the last year,” says Spann. “We know some of it is bots, but I do believe that some of it is coming from people that honestly believe the moon disappeared because the government nuked it to control the hurricanes, or that the government used chemtrails to spray our skies with chemicals to steer [Hurricane] Helene into the mountains of North Carolina.”

Modern social media might have made these kinds of mad ideas more viral, but make no mistake: this type of thinking has always been common; it certainly was in the television and newspaper age, too; though I think it is fair to tie it to media.

So we've discussed, if briefly, two extremes: large scale cabals which do exist, but which are flaky because conspiracy at scale is not robust; and then the flat-out batshit insane conspiracy "theories" which only occupy human brains because of this fallacy of agency. But a lot of the interesting cases sit somewhere in the middle.

The 9-11 attacks capture the nuance very well here, in my opinion. Al-Qaida in its nature was a kind of conspiracy. Though I quite loathe the loaded term "terrorism", it very much describes what Al-Qaida did outside of their homelands: they did actually set up cells with covert communication, and planned elaborate attacks to instil fear into their enemies. They were doing this already in 1993 (literally attacking the same World Trade Center, by the way). In the dramatically successful attacks of 2001, there is a surfeit of evidence of who these people were and their connections to Saudi Arabia, Afghanistan and specifically the Al-Qaida organization. If you want to go deep into causal connections, it isn't hard to realize that (a) this kind of terrorism is a function of Western powers' interference in the Middle East - even if it is also a function of religious extremist views - and (b) that even Al-Qaida in its very essence is kind of a creation of Western powers (I can recommend Curtis' The Power of Nightmares part 3 for substantial detail on this).

But it's instructive that the people who ended up being labelled "conspiracy theorists" about this topic did not focus on the above substantial evidence at all! They instead hypothesized:

- The collapse of the twin towers was a planned demolition and thermite was used to execute it

- The collapse of WTC-7 (which many people, including myself, watched happening in real time on television) was also a planned demolition

- There was no plane that crashed into the Pentagon

- It was a Mossad operation

- It was a Saudi government operation

- It was a US government or CIA operation

- US authorities did not plan it but saw the plan and let it happen

By 2008, the US government itself was even publishing webpages to try to dispel these, which is a pretty strong indicator of how seriously they were taken. There were many others, of course. Also, I am not saying that these are all wrong, or that they are partially right or wrong. What's notable is how much this all is marked by the tell-tale signs of the fallacy of agency: "buildings don't fall like that in such a clean way just by accident!".

Agency-less agents in the P2P revolution

As the digital realm took hold and became central to civilization over the last 40 or so years, it offered new forms of organization. In this new realm, individual or small scale human agency is becoming even less important than its already reduced role in the agricultural and then industrial ages. Nothing embodies this shift more radically than so-called "peer to peer" (P2P) technologies.

P2P is, at least culturally, intimately linked with the free software movement; both represent a rejection of, or at least push-back against, corporate hierarchies.

Richard Stallman is an incredibly important figure in the development of software over the last half century. He championed, extremely early on, in the mid 1980s, the idea that users should have rights in a world of software developed by corporations; so what I mentioned above as the "free software movement" is built on what he defined as free software (often labelled FOSS or FLOSS today), namely four freedoms:

- Freedom 0: The freedom to run the program as you wish, for any purpose.

- Freedom 1: The freedom to study how the program works and change it to make it do what you wish.

- Freedom 2: The freedom to redistribute copies so you can help others.

- Freedom 3: The freedom to distribute copies of your modified versions to others.

The emphasis implied here - on how power taken away from the users could be problematic - proved incredibly prescient across modern 21st Century life, extending to things like tractors.

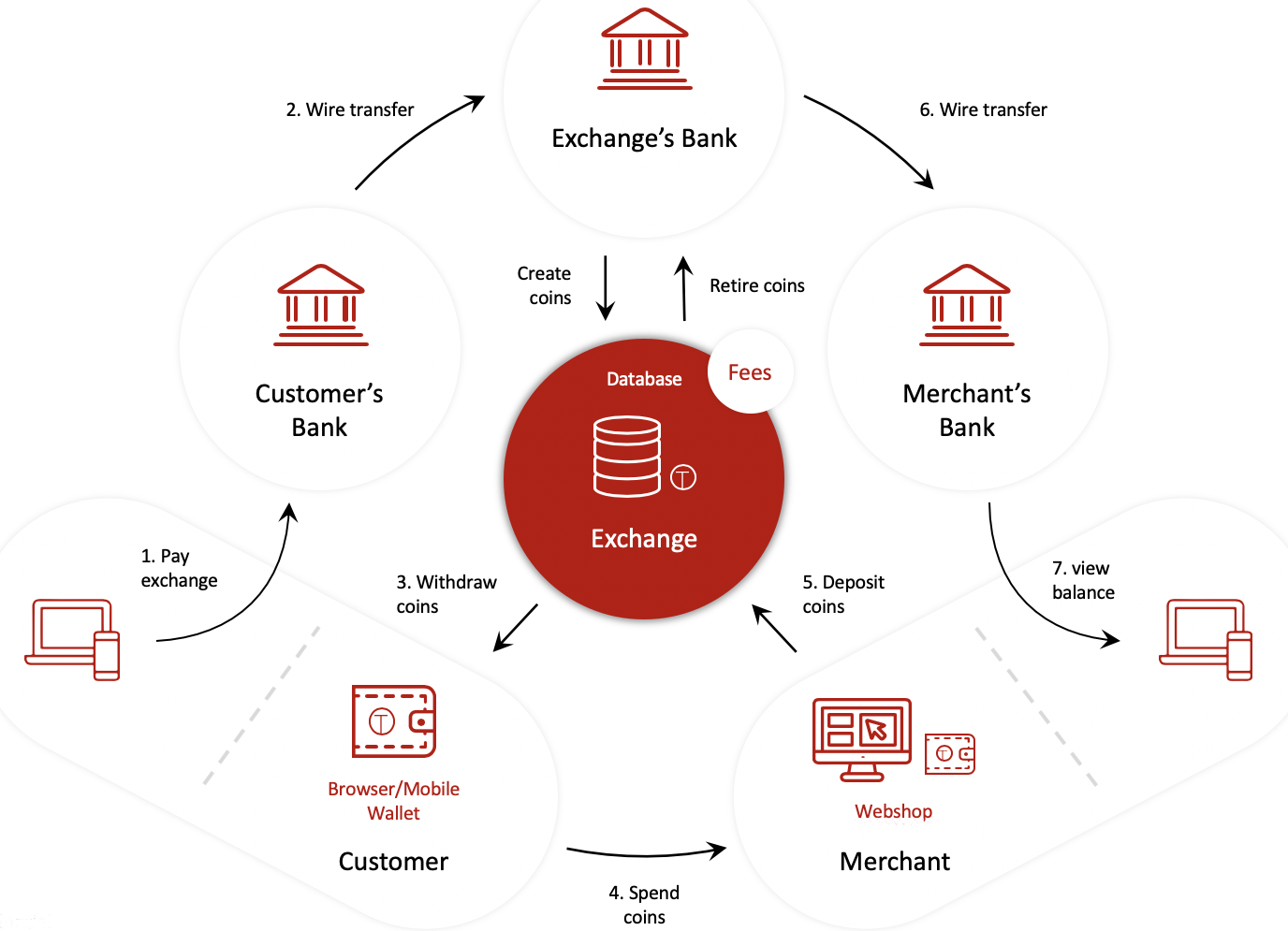

Here there is a big, and explicit, focus on the role of the user. This comes into sharp focus when you see Stallman's reaction to Bitcoin. A P2P technology like Bitcoin cannot intrinsically give lesser rights to a "user" class of agent if it doesn't have such a class embedded into it, but Stallman's skepticism about the technology focuses on user privacy. This is best illustrated in how he describes his own preferred scheme, GNU Taler:

In any case, the GNU project has developed something much better, which is GNU Taler. GNU Taler is not a cryptocurrency. It is not a currency at all. It is a payment system designed to be used for anonymous payments to businesses to buy something. It is anonymous through a blind signature for the payer. However, the payee has to identify itself for every purchase in order to get money out of the system. So the idea is you can use your bank account to get Taler Tokens, and you can spend them and the payee won't be able to tell who you are.

So, if he dislikes Facebook for its surveillance, which is aided by its being non-FOSS proprietary software, it's not strange for him to dislike the same outcome (potential or actual surveillance of Bitcoin transactions) with a different root cause (since Bitcoin as software is, in fact, resolutely in line with his FLOSS ideals).

(To be absolutely clear, I consider Stallman's "much better" assertion here to be lacking in understanding and impractical, and I also consider him wrong in exactly the same way that Chaum was in the 80s, in thinking that blind signature based ecash is "the" solution for online payments with privacy, even though it relied (in his model) on the banking system and/or centralization. But this is very much another topic!).
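For readers who have not met the "blind signature" that both the quote and Chaum's ecash lean on, here is a toy sketch using textbook RSA. It is emphatically not a description of GNU Taler's actual protocol: the tiny key, the function names and the bare unpadded RSA are all assumptions chosen to keep the arithmetic visible, and nothing here is secure. What it does show is the structural point of the argument: the scheme only works because there is a signer (the bank, the mint) holding a private key.

```python
from math import gcd

# Toy RSA key built from tiny textbook primes; insecure, for illustration only.
P, Q = 61, 53
N = P * Q                            # public modulus
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (needs Python 3.8+)


def blind(message: int, r: int) -> int:
    """Payer hides the message behind a random factor r before sending it."""
    assert gcd(r, N) == 1, "blinding factor must be invertible mod N"
    return (message * pow(r, E, N)) % N


def sign(blinded: int) -> int:
    """Signer (e.g. a bank) signs the blinded value, never seeing the message."""
    return pow(blinded, D, N)


def unblind(blinded_signature: int, r: int) -> int:
    """Payer removes the factor, leaving an ordinary signature on the message."""
    return (blinded_signature * pow(r, -1, N)) % N


def verify(message: int, signature: int) -> bool:
    """Anyone can check the signature against the signer's public key."""
    return pow(signature, E, N) == message % N
```

The payer computes `unblind(sign(blind(m, r)), r)` and ends up with a signature that verifies for `m`, even though the signer only ever saw the blinded value. The privacy is real, but so is the dependency: whoever holds `D` is a central agent.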

I see this mode of thinking as the same fallacy in a different aspect; something which, I think, is at the root of much "left"-leaning (ugh, "left" and "right" politics is far too loaded and vague of a distinction, but let's stick with it here) political ideology. The idea is not so much "there is some powerful agent directing all this" as "there needs to be a powerful agent created to direct this otherwise it'll never work". That's the savanna brain thinking! And here it's coming up with exactly the wrong answer!

- the development of P2P technologies starting with Tor and BitTorrent (c. 2000-2002) and continuing to Bitcoin and its descendants, during the 2000s, creates a narrative in stark contrast to the unconscious assumption of the fallacy of agency - you do not need a central actor or set of actors (we'll handwave Tor's directory nodes for a second) to make such complex dynamic systems work; what's more, systems like this have an astounding robustness and last for years precisely because of the absence of any such central agent.

As emphasized by the final clause above, the negation of the fallacy is, in this case, not just sufficient but also necessary. There is no clearer example than the historical development of digital cash - every one of the earlier systems like DigiCash, e-gold and Liberty Reserve failed because they had an agent/actor controlling them.

The Bitcoin whitepaper itself, in one of its few somewhat evocative passages, makes the crucial point:

"The network is robust in its unstructured simplicity. Nodes work all at once with little coordination. They do not need to be identified, since messages are not routed to any particular place and only need to be delivered on a best effort basis."

To put it another way, Bitcoin has no agents. It has quantifiable work instead. And that, more than anything else, is the reason why it has been running uninterrupted for 15+ years.
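What "quantifiable work instead of agents" means can be sketched with a toy hash puzzle. This is a simplified illustration and not Bitcoin's actual block format or difficulty rules: the single SHA-256 pass, the 8-byte nonce encoding and the function names are assumptions made to keep it short.

```python
import hashlib


def work_hash(data: bytes, nonce: int) -> int:
    """Hash the data together with a nonce and read the digest as an integer."""
    digest = hashlib.sha256(data + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")


def find_nonce(data: bytes, difficulty_bits: int) -> int:
    """Search for a nonce whose hash is below the target.

    Expected effort is about 2**difficulty_bits hashes; there is no shortcut.
    """
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while work_hash(data, nonce) >= target:
        nonce += 1
    return nonce


def check(data: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Verify the work with one hash, without knowing or caring who did it."""
    return work_hash(data, nonce) < (1 << (256 - difficulty_bits))
```

The asymmetry is the whole trick: `find_nonce` is expensive, `check` costs a single hash, and neither function takes an identity as an argument. A valid nonce is accepted because the work is there, not because of who presents it.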

Tor and BitTorrent, meanwhile, do not even have that "work" binding agent, and actually do have routing - so they are quite a bit more fragile (see recent changes in Tor that illustrate this point), yet still succeed at a surprising level just by the value experienced in participating in these "agency-less" systems.

("agency-less"? - yes, all of these systems have agents - nodes and temporary nyms - by their nature. But, to the extent they adhere to a pure flat structure, each node is dispensable, each node is dumb in terms of action - it simply follows a protocol, and yet the emergent function is extremely powerful - like the cells of a human eye).

But why is P2P tech special? Should everything just be anarchy?

With the exception of some anarcho-capitalists, I guess, most readers will not be very convinced by the idea of taking these examples of robustness in technology and applying them to the world at large (though anarcho-capitalism seems to be making some progress in certain parts of the globe). That's reasonable. So in what kind of situation is it important to be aware of and remove this "fallacy of agency" bias?

Dunbar reprises his role

In an earlier essay I had occasion to wheel out Dunbar's number, and it's unsurprisingly still very relevant here. We noted how in the case of conspiracies, they start to lose plausibility at scale, for this same reason: you might have 5 or 10 or perhaps at extremes 50-100 hunters going after the woolly mammoth; you might, similarly, live in a village of 100. But not 1000+; those numbers were reserved for the advent of agriculture. And so to this day humans can "manage" agency within groups of 10-100, can remember the names of perhaps 100 acquaintances but none of that thinking ever really applies at any larger scales such as the ones we are used to in the digital 21st century.

So, when it comes to achieving desirable societal outcomes (let's take a slightly contrived one like "society needs to use less energy because we have a problem sourcing fuel"), the fallacy-of-agency way of thinking is to create a "fuel czar" that directs government agencies to uh, be more efficient or something. To create an education program. To discuss it etc. etc. Whereas in reality, problems like this occurring at scale (and not in a village) are immune to human agents' direction unless that direction is backed up with strong incentives. It's not a matter of preferring a more human-coordination based approach, or preferring a more "cold", incentives-based approach. It's that the former cannot work (even though the latter can be extremely problematic sometimes), as evidenced by the now long history of Communism and its utter failure to even sustain itself (let alone bring benefit), absent nightmarish enforcement (which, again, is incentive, this time negative, not voluntary coordination).

And this to me is the ultimate ethical point of this line of thinking. It is ironically far more ethical to be cold, unfeeling and inhuman when dealing with matters at scale, while at the scale of your family and tribe the opposite is of course true. It doesn't mean individuals or groups can't make plans to build systems to achieve outcomes - after all that's how Tor, BitTorrent etc. came to be, too. The point is that they're protocols and they're not defined in terms of human agency.

In matters of the large scale, then, not only are we cogs in the machine, we must do our best to treat others as cogs in the machine and not special, in order to be appropriately ethical and to build societies that actually work for everyone's benefit.

Treating the ethics of the tribe as cross-applicable to the ethics of the nation state or similar is a kind of ethical impedance-mismatch that is a huge source of failure and suffering in the world. And the tendency to do this is rooted deeply, I suspect, in the fallacy of agency.

In the digital realm, the agent has a name - Sybil

As a wise man once put it, "Identity is the problem, not the solution". This essay should let the reader know what is meant by that, if it was not clear before. The unrealness of identities or agents (see the Ship of Theseus, if eastern mystical philosophy or Heraclitus are not your bag) is the reason that systems dependent on them are flaky and fragile.

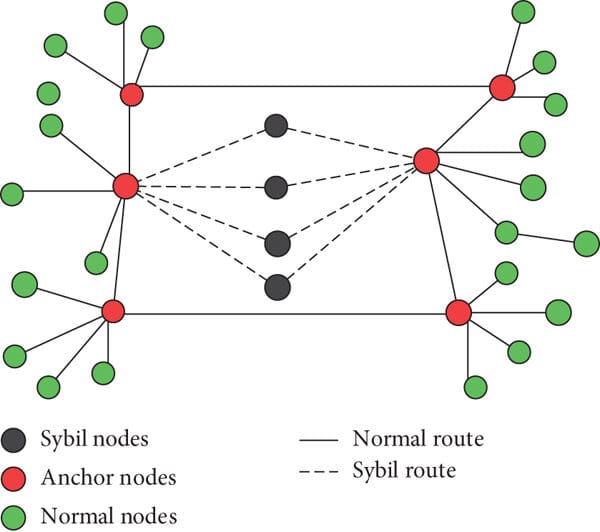

Most of the digital systems you use today, such as online banking or Netflix subscriptions, do indeed use identities, and they can't function properly without them. This struggle is exemplified by the concept of the Sybil attack.

Access to resources must be limited, for any useful distribution of resources. But, accounts cannot be limited easily without third parties enforcing some access control. But, those third parties, as we discussed, represent single points of failure that can lead to full blown collapse of systems. Agency is a construct; and its artificiality is shown most clearly when digital systems fail due to the creation of a billion agents (we often call them "bots"). This problem will grow worse as AI gets better.

The imposition of energetic cost is, to my mind, the solution to the problem of how to unleash the full potential of autonomous agents in the digital realm (this blog site is called "reyify" as a pun on "reify" to refer to this concept). The energetic cost can be imposed indirectly, however, using trustless digital cash.